In a step toward practical quantum computing, researchers from MIT, Google, and elsewhere have designed a system that can verify when quantum chips have accurately performed complex computations that classical computers can’t.

Quantum chips perform computations using quantum bits, called “qubits,” that can represent the two states corresponding to classic binary bits (a 0 or a 1) or a “quantum superposition” of both states simultaneously. This unique superposition state can enable quantum computers to solve problems that are practically impossible for classical computers, potentially spurring breakthroughs in materials design, drug discovery, and machine learning, among other applications.
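To make superposition concrete, here is a minimal Python sketch (an illustration of our own, not from the researchers) that stores one qubit as two complex amplitudes and applies the standard Born rule: the probability of reading 0 or 1 is the squared magnitude of the corresponding amplitude. The function names are hypothetical.

```python
import math
import random

# Toy model: one qubit stored as amplitudes (alpha, beta) for alpha|0> + beta|1>.


def normalize(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Rescale the amplitudes so that |alpha|^2 + |beta|^2 = 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm


def probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule: chance of reading 0 or 1 when the qubit is measured."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition yields a single classical bit."""
    p0, _ = probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


zero = (1 + 0j, 0j)               # behaves like a classical bit set to 0
plus = normalize(1 + 0j, 1 + 0j)  # equal superposition of 0 and 1

rng = random.Random(42)
print(probabilities(*zero))  # (1.0, 0.0)
print(probabilities(*plus))  # roughly (0.5, 0.5)
print(sum(measure(*plus, rng) for _ in range(1000)) / 1000)  # close to 0.5
```

Measuring the superposed qubit gives 0 or 1 at random, each about half the time; only the statistics over many runs reveal the underlying amplitudes.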

Full-scale quantum computers will require millions of qubits, which isn’t yet feasible. In the past few years, researchers have started developing “Noisy Intermediate Scale Quantum” (NISQ) chips, which contain around 50 to 100 qubits. That’s just enough to demonstrate “quantum advantage,” meaning the NISQ chip can solve certain problems that are intractable for classical computers. Verifying that the chips performed operations as expected, however, can be very inefficient. The chip’s outputs can look entirely random, so it takes a long time to simulate the steps needed to determine whether everything went according to plan.

In a paper published today in Nature Physics, the researchers describe a novel protocol to efficiently verify that an NISQ chip has performed all the right quantum operations. They validated their protocol on a notoriously difficult quantum problem running on a custom quantum photonic chip.

“As rapid advances in industry and academia bring us to the cusp of quantum machines that can outperform classical machines, the task of quantum verification becomes time critical,” says first author Jacques Carolan, a postdoc in the Department of Electrical Engineering and Computer Science (EECS) and the Research Laboratory of Electronics (RLE). “Our technique provides an important tool for verifying a broad class of quantum systems. Because if I invest billions of dollars to build a quantum chip, it sure better do something interesting.”

Joining Carolan on the paper are researchers from EECS and RLE at MIT, as well as from the Google Quantum AI Laboratory, Elenion Technologies, Lightmatter, and Zapata Computing.

Divide and conquer

The researchers’ work essentially traces an output quantum state generated by the quantum circuit back to a known input state. Doing so reveals which circuit operations were performed on the input to produce the output. Those operations should always match what the researchers programmed. If not, the researchers can use the information to pinpoint where things went wrong on the chip.
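The basic idea of tracing an output back to its input can be illustrated with a toy single-qubit circuit. The Python sketch below is a deliberate simplification, not the researchers’ protocol: it assumes each programmed operation is a known 2-by-2 unitary matrix, runs the circuit forward, and then applies the inverses (conjugate transposes) in reverse order. If the chip did what was programmed, the known input comes back; if one operation drifted, the overlap with the input drops below 1. The gate choices and the 0.7 versus 0.9 phase values are invented for illustration.

```python
import cmath
import math

Matrix = list[list[complex]]
Vector = list[complex]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def dagger(m: Matrix) -> Matrix:
    """Conjugate transpose; for a unitary gate this is its inverse."""
    n = len(m)
    return [[m[j][i].conjugate() for j in range(n)] for i in range(n)]


def run(circuit: list[Matrix], state: Vector) -> Vector:
    """Apply the gates in order, as the chip would."""
    for gate in circuit:
        state = matvec(gate, state)
    return state


def trace_back(circuit: list[Matrix], output: Vector) -> Vector:
    """Undo the *programmed* gates in reverse order."""
    for gate in reversed(circuit):
        output = matvec(dagger(gate), output)
    return output


def overlap(a: Vector, b: Vector) -> float:
    """|<a|b>|^2: equals 1.0 when the states match (up to a global phase)."""
    return abs(sum(x.conjugate() * y for x, y in zip(a, b))) ** 2


def phase(theta: float) -> Matrix:
    return [[1, 0], [0, cmath.exp(1j * theta)]]


h = 1 / math.sqrt(2)
H = [[h, h], [h, -h]]  # Hadamard gate

programmed = [H, phase(0.7), H]
faulty = [H, phase(0.9), H]  # hypothetical chip that applied a slightly wrong phase
zero = [1 + 0j, 0j]          # known input state

good_out = run(programmed, zero)
bad_out = run(faulty, zero)
print(overlap(zero, trace_back(programmed, good_out)))  # ~1.0: operations match
print(overlap(zero, trace_back(programmed, bad_out)))   # below 1.0: mismatch
```

Here the faulty run leaves a residual phase of 0.2 after tracing back, so the overlap is cos²(0.1), roughly 0.99; the size of the deviation hints at which operation went wrong.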

At the core of the new protocol, called “Variational Quantum Unsampling,” lies a “divide and conquer” approach, Carolan says, that breaks the output quantum state into chunks. “Instead of doing the whole thing in one shot, which takes a very long time, we do this unscrambling layer by layer. This allows us to break the problem up and tackle it in a more efficient way,” Carolan says.

For this, the researchers took inspiration from neural networks, which solve problems through many layers of computation, to build a novel “quantum neural network” (QNN), where each layer represents a set of quantum operations.

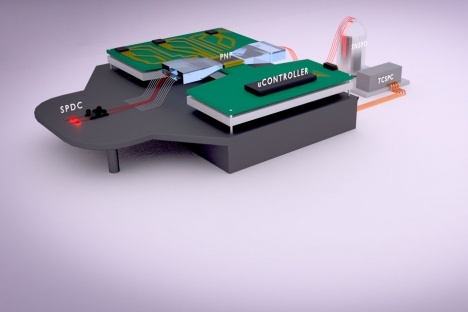

To run the QNN, they used traditional silicon fabrication techniques to build a 2-by-5-millimeter NISQ chip with more than 170 control parameters: tunable circuit components that make it easier to manipulate the photons’ paths. Pairs of photons are generated at specific wavelengths by an external component and injected into the chip. The photons travel through the chip’s phase shifters, which change the photons’ paths, and interfere with one another. This produces a random quantum output state, which represents what would happen during a computation. The output is measured by an array of external photodetector sensors.
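The article does not describe the chip’s internal layout, but tunable photonic circuits of this kind are commonly built from Mach-Zehnder interferometers: two 50:50 beamsplitters with a phase shifter between them. Assuming that building block, the Python sketch below shows how a single phase setting steers a photon between two waveguides. The beamsplitter convention (an i on the off-diagonal) is one standard choice, and the function names are hypothetical.

```python
import cmath
import math

Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def beamsplitter() -> Matrix:
    """50:50 beamsplitter acting on two waveguide modes (upper = 0, lower = 1)."""
    s = 1 / math.sqrt(2)
    return [[s, 1j * s], [1j * s, s]]


def phase_shifter(phi: float) -> Matrix:
    """Shifts the phase of light in the upper waveguide by phi."""
    return [[cmath.exp(1j * phi), 0], [0, 1]]


def mzi(phi: float) -> Matrix:
    """Mach-Zehnder interferometer: beamsplitter, then phase shift, then beamsplitter."""
    b = beamsplitter()
    return matmul(b, matmul(phase_shifter(phi), b))


def output_probabilities(u: Matrix, input_mode: int) -> list[float]:
    """Chance of detecting a single photon, injected at input_mode, at each output."""
    return [abs(u[row][input_mode]) ** 2 for row in range(len(u))]


for phi in (0.0, math.pi / 2, math.pi):
    probs = output_probabilities(mzi(phi), input_mode=0)
    print(f"phi={phi:.2f}: upper={probs[0]:.3f}, lower={probs[1]:.3f}")
```

With this convention a photon entering the upper waveguide exits the upper output with probability sin²(phi/2): it leaves through the lower output at phi = 0, splits evenly at phi = pi/2, and stays in the upper output at phi = pi. Meshes of such elements, each with its own tunable phase, are one way to obtain many control parameters on a single chip.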

That output is sent to the QNN. The first layer uses complex optimization techniques to dig through the noisy output and pinpoint the signature of a single photon among all those scrambled together. It then “unscrambles” that single photon from the group to identify which circuit operations return it to its known input state. Those operations should exactly match the circuit’s specific design for the task. Each subsequent layer performs the same computation, removing from the equation any previously unscrambled photons, until all photons are unscrambled.
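The researchers’ QNN finds each layer by variational optimization on measured data. As a much simpler stand-in that shows only the divide-and-conquer structure, the Python sketch below assumes the full single-photon transfer matrix of the “black box” circuit is already known and undoes it one photon at a time using two-mode rotations, the element-nulling idea behind the Reck decomposition of linear-optical circuits. This is a swapped-in technique, not the paper’s algorithm; `random_interferometer` and `unscramble` are hypothetical names.

```python
import cmath
import math
import random

Matrix = list[list[complex]]


def identity(n: int) -> Matrix:
    return [[1 + 0j if i == j else 0j for j in range(n)] for i in range(n)]


def apply_two_mode(m: Matrix, r: int, g: Matrix) -> None:
    """Left-multiply m, in place, by a 2x2 unitary g acting on modes r and r + 1."""
    top, bottom = m[r], m[r + 1]
    m[r] = [g[0][0] * x + g[0][1] * y for x, y in zip(top, bottom)]
    m[r + 1] = [g[1][0] * x + g[1][1] * y for x, y in zip(top, bottom)]


def random_interferometer(n: int, depth: int, rng: random.Random) -> Matrix:
    """Hypothetical 'black box': random two-mode mixers between neighbouring modes."""
    u = identity(n)
    for _ in range(depth):
        r = rng.randrange(n - 1)
        theta, phi = rng.uniform(0, math.pi), rng.uniform(0, 2 * math.pi)
        c, s, e = math.cos(theta), math.sin(theta), cmath.exp(1j * phi)
        apply_two_mode(u, r, [[e * c, -s], [e * s, c]])  # a 2x2 unitary
    return u


def unscramble(u: Matrix) -> tuple[list[list[tuple[int, Matrix]]], Matrix]:
    """Undo u one photon (column) per layer.

    Layer c finds two-mode rotations that send a photon injected in mode c back
    to output mode c. It only touches modes >= c, so photons already unscrambled
    by earlier layers are left alone.
    """
    m = [row[:] for row in u]
    n = len(m)
    layers = []
    for c in range(n - 1):
        layer = []
        for r in range(n - 1, c, -1):  # null column entries from the bottom up
            a, b = m[r - 1][c], m[r][c]
            norm = math.hypot(abs(a), abs(b))
            if norm < 1e-12:
                continue
            g = [[a.conjugate() / norm, b.conjugate() / norm], [-b / norm, a / norm]]
            apply_two_mode(m, r - 1, g)  # zeroes m[r][c]
            layer.append((r - 1, g))
        layers.append(layer)
    return layers, m  # m is now diagonal: only one phase per mode remains


rng = random.Random(1)
black_box = random_interferometer(n=4, depth=30, rng=rng)
layers, residual = unscramble(black_box)
print(len(layers), "layers")
print(max(abs(residual[i][j]) for i in range(4) for j in range(4) if i != j))  # tiny
```

Each layer leaves the modes handled by earlier layers untouched, mirroring the article’s description of removing already unscrambled photons from the equation. In a verification setting, the recovered rotations would then be compared against the circuit’s programmed design.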

For example, say the input state of the qubits fed into the processor was all zeroes. The NISQ chip executes a series of operations on the qubits to generate a massive, seemingly random number as output. (Repeated runs yield different numbers because the output is in a quantum superposition.) The QNN selects chunks of that massive number. Then, layer by layer, it determines which operations revert each qubit back to its input state of zero. If any operations differ from the originally planned operations, then something has gone awry. Researchers can inspect any mismatches between the expected and recovered output-to-input mappings, and use that information to tweak the circuit design.

Boson “unsampling”

In experiments, the team successfully ran a popular computational task used to demonstrate quantum advantage, called “boson sampling,” which is usually performed on photonic chips. In this exercise, phase shifters and other optical components manipulate and convert a set of input photons into a different quantum superposition of output photons. Ultimately, the task is to calculate the probability that a certain input state will map to a certain output state. Each result is essentially a sample from some probability distribution.
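The probability described above has a known closed form in linear optics: for photons that enter and leave through specified modes, it is the squared magnitude of the permanent of a submatrix of the circuit’s unitary, divided by factorials that account for several photons sharing a mode. Exactly computing the permanent is #P-hard, which is the source of boson sampling’s classical difficulty. The Python sketch below uses a brute-force permanent that is only practical for a handful of photons; `transition_probability` is a hypothetical helper name, and the 50:50 beamsplitter convention is an assumption.

```python
import itertools
import math
from collections import Counter

Matrix = list[list[complex]]


def permanent(m: Matrix) -> complex:
    """Like a determinant but with every sign +1. Brute force: O(n! * n)."""
    n = len(m)
    total = 0j
    for perm in itertools.permutations(range(n)):
        term = 1 + 0j
        for row, col in enumerate(perm):
            term *= m[row][col]
        total += term
    return total


def transition_probability(u: Matrix, inputs: tuple[int, ...], outputs: tuple[int, ...]) -> float:
    """Probability that photons entering modes `inputs` exit in modes `outputs`.

    Each tuple lists one mode index per photon, so (0, 0) means two photons in mode 0.
    """
    sub = [[u[out][inp] for inp in inputs] for out in outputs]
    occupancy = 1
    for count in [*Counter(inputs).values(), *Counter(outputs).values()]:
        occupancy *= math.factorial(count)
    return abs(permanent(sub)) ** 2 / occupancy


s = 1 / math.sqrt(2)
B = [[s, 1j * s], [1j * s, s]]  # 50:50 beamsplitter

# Two photons, one in each input mode.
for outputs in [(0, 0), (0, 1), (1, 1)]:
    print(outputs, round(transition_probability(B, (0, 1), outputs), 3))
```

Running it gives 0.5, 0.0 and 0.5: the two photons always leave together. The zero for one photon per output is the Hong-Ou-Mandel effect; two distinguishable particles would instead end up in separate outputs half the time. Interference of this kind, across many photons and modes, is what makes the output distribution hard to sample classically.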

But it’s nearly impossible for classical computers to compute those samples, due to the unpredictable behavior of photons. It’s been theorized that NISQ chips can compute them fairly quickly. Until now, however, there’s been no way to verify that quickly and easily, due to the complexity of both the NISQ operations and the task itself.

“The very same properties which give these chips quantum computational power make them nearly impossible to verify,” Carolan says.

In experiments, the researchers were able to “unsample” two photons that had run through the boson sampling problem on their custom NISQ chip, and in a fraction of the time it would take traditional verification approaches.

“This is an excellent paper that employs a nonlinear quantum neural network to learn the unknown unitary operation performed by a black box,” says Stefano Pirandola, a professor of computer science who specializes in quantum technologies at the University of York. “It is clear that this scheme could be very useful to verify the actual gates that are performed by a quantum circuit, [for example] by a NISQ processor. From this point of view, the scheme serves as an important benchmarking tool for future quantum engineers. The idea was remarkably implemented on a photonic quantum chip.”

While the method was designed for quantum verification purposes, it could also help capture useful physical properties, Carolan says. For instance, certain molecules, when excited, will vibrate and then emit photons based on these vibrations. By injecting these photons into a photonic chip, Carolan says, the unscrambling technique could be used to discover information about the quantum dynamics of those molecules to aid in bioengineering molecular design. It could also be used to unscramble photons carrying quantum information that have accumulated noise by passing through turbulent spaces or materials.

“The dream is to apply this to interesting problems in the physical world,” Carolan says.